By Wilson Rothman

Me: "Alexa, microwave oatmeal."

Alexa: "OK, at what temperature?"

Me: "I don't know."

Alexa: "Hmm, I'm not sure."

I'm trying out a new Amazon-branded oven that's designed to be easy to operate with simple Alexa voice commands.

While in many ways it is easy, and it can do some impressive things, it exemplifies the problems we encounter when we try to control too much with our voices. Does anybody know the temperature at which they microwave oatmeal? Or even that microwaves had temperature settings?

The oven does a lot -- it is also a convection oven and an air fryer -- but when I said "cook salmon," Alexa asked how much. I said a pound and was informed I can only cook "0.063 to 0.37 pound of salmon." And while I can easily turn ON the convection oven with my voice, I can't turn OFF the convection oven with my voice. (The oven itself doesn't talk; you command it using a nearby Alexa-powered speaker.)

Amazon says that many of the issues I encountered are being fixed in background updates, but it'll be a long time before conversations with voice assistants are wrinkle-free. It's almost like practicing a foreign language before a trip: You memorize certain phrases, but when you've used them up, you raise your voice and start gesticulating wildly.

I've replaced many light switches with Wi-Fi-powered ones; other lamps have connected bulbs. I have a talking speaker in many rooms, including bathrooms, and yes, the Christmas tree lights are connected to a smart plug. My family members all yell out commands to Alexa, and for the most part she obeys. But we keep it straightforward: Play this song, turn off these lights, set some timer or alarm.

The same can be said for how we interact with Siri on our Apple devices, or with the Google Assistant on the Nest Hub Max in our kitchen.

"Voice is at its best when you are able to do something quickly, in the flow of whatever you are doing," Ahmed Bouzid, chief executive of the voice-first software developer Witlingo and a former Alexa product head at Amazon, said in an email. The best interfaces should require less effort than the commands they are replacing.

In the case of Amazon's smart oven, Mr. Bouzid says he's skeptical: If you're cooking, you're near your oven anyway and you generally don't try to do other things while cooking -- so you might as well push the button.

The counterargument, from Daniel Rausch, Amazon's vice president of smart home, is that the oven has so many capabilities, no other interface can hold them: "If you tried to draw a chart of all the capabilities of those devices and wanted to put each on a button, you'd need a roadside billboard-size panel for buttons," he said.

So is that what voice assistants are meant to do, replace switches and search boxes? The companies behind these interfaces are constantly adding features to their assistants, some surprisingly revolutionary. There is a lot you can do now if you work at it. But due to a combination of factors, including privacy considerations, it still feels like we're in a rut.

Talking Back

Routines are a way to combine various actions into one string, so that a simple voice command like "I'm home" shuts off alarms, turns on lights, adjusts thermostats and maybe kicks on some smooth jazz.

In theory, this is cool; in practice, it's annoying -- because to set it up you have to sit there and think about all of the things you want to happen at once, and how to make it all happen.

Now, apps that control these interfaces provide suggestions -- often based on your own actions.

Apple's version of this comes when you download an app called Shortcuts. It isn't the easiest app to use, but if you open it and tap Gallery, then look at Shortcuts from Your Apps, you might find something useful. At night, I normally set three alarms -- "Wake Up," "School Bus Pickup" and "Train." Now, I can just say "Morning Alarms," and they are set for me.

But routines only solve the problem of too many buttons to push. Developers are also making these interfaces more conversational, allowing for follow-up questions. Maybe you say, "Turn on the porch lights," and that happens, then your assistant might suggest, "Do you want to turn on the patio lights, too?" Because that would make sense.

While both Amazon and Google do suggest actions, Amazon's "hunches" take it further: When you ask for the porch lights, it might say, "Do you also want me to play smooth jazz?" The question would be based on your (possibly unconscious) behavior: Often, when you turn on porch lights, you also fire up the smooth jazz.

Both Amazon and Google also allow you to momentarily do without a wake word. By enabling Follow-Up in the Alexa app and Continued Conversation in the Google Home app, you can wake your assistant, then keep asking questions without repeating the wake word. It also retains some of the context: "Alexa, what day is Christmas?" Then, after it answers, you say, "What about Easter?"

Apple's Siri does this in different contexts. The AirPods Pro now have an Announce Message feature, which reads messages and allows you to respond in a conversational way. Walmart's grocery-delivery app for iOS leverages Siri along with your shopping history, so you can more easily pick out items with just your voice, without memorizing key phrases.

Personalization and Privacy

What's really needed is a tighter bond between human and disembodied voice, analysts that I spoke to said. Personalization means recognizing who is talking and remembering their preferences. But that requires data collection, and lately we've grown more conscious of that.

"Privacy is in the forefront of consumers' minds. Companies like Apple have done a lot of work on improving it," Simon Forrest, principal analyst at Futuresource Consulting, Ltd., said. It has become increasingly feasible for devices to store information locally and to recognize a particular voice when it requests a particular movie, for instance.

The Alexa app provides a separate smart-home device history that you can purge. Google also says it allows you to review and delete your history.

"We are across the board thinking how we can have as little data as possible while still improving the product for users," said Lilian Rincon, senior director of product for Google Assistant. For now, for quality reasons, most of what Google Assistant does requires the cloud, she said. However, "we have a desire to put more things on device."

Google's Pixel 4 phone is a remarkable example of this, as my colleague Joanna Stern demonstrated earlier this year. It can transcribe speech to text in real time using nothing but the silicon inside the phone. The iPhone 11 is also doing more without the cloud, such as rendering Siri's new voice, and even older iPhones use on-device processing to monitor your behavior and suggest actions based on it.

Discover Me

So why, with all this evolution, are we still mostly just setting timers and asking for tunes? Unlike a new icon in your favorite app, you can't really see what's new with voice assistants. The Alexa, Google Home and Siri Shortcuts apps offer loads of suggestions, as do the screen-equipped speaker devices that Amazon and Google sell. But it really hasn't been enough to train people up.

"One of the biggest problems we continue to have is the problem of discovery, especially for speakers -- letting you know what it is you can do," Ms. Rincon said.

Amazon's Mr. Rausch also acknowledged the discoverability problem, using Jeff Bezos' famous phrase: "It's day one at Amazon, definitely for Alexa and AI."

So what does day two -- or day 12 -- look like? Futuresource's Mr. Forrest says even a voice-first interface might incorporate technologies like gesture control and haptic feedback, like the tap of an Apple Watch on your wrist. He sees "hearables," aka supersmart AirPods, as a likely voice-first success.

Meanwhile, I'll be trying to talk this smart oven into cooking more than 0.37 pound of salmon.

Write to Wilson Rothman at Wilson.Rothman@wsj.com

(END) Dow Jones Newswires

December 15, 2019 09:14 ET (14:14 GMT)

Copyright (c) 2019 Dow Jones & Company, Inc.

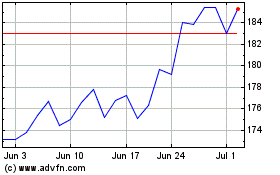

Alphabet (NASDAQ:GOOGL)

Historical Stock Chart

From Mar 2024 to Apr 2024

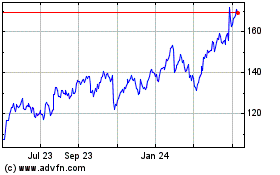

Alphabet (NASDAQ:GOOGL)

Historical Stock Chart

From Apr 2023 to Apr 2024