By Heidi Vogt

WASHINGTON -- Technology companies are racing to get ahead of regulators to shape the future of artificial intelligence as it moves deeper into our daily lives.

Companies are already working artificial intelligence, or AI, into their business models, but the technology remains controversial. So IBM Corp., Intel Corp. and associations representing Apple Inc., Facebook Inc. and Alphabet Inc.'s Google unit are seeking to set ethical standards, or a sort of code of conduct, through alliances with futurists, civil-rights activists and social scientists.

Critics, however, see it as an effort to blunt outside regulation by cities, states or the federal government, and they question whether tech companies are best suited to shape the rules of the road. For the corporations, the algorithms will be proprietary tools to assess your loan-worthiness, your job application and your risk of stroke. Many balk at the costs of developing systems that not only learn to make decisions but also explain those decisions to outsiders.

When New York City proposed a law in August requiring that companies publish source code for algorithms used by city agencies, tech firms pushed back, saying they needed to protect proprietary algorithms. The city passed a scaled-back version in December without the source-code requirement.

"They're hiding behind trade secrets so you can't even get a look at what people are doing," said Ryan Calo, a University of Washington law professor who has advised members of Congress on AI issues.

AI, broadly speaking, refers to computers mimicking intelligent behavior, crunching big data to make judgments on anything from avoiding car accidents to where the next crime might happen.

Yet the logic behind computer algorithms isn't always clear. If a computer consistently denies a loan to members of a certain sex or race, is that discrimination? Will regulators have the right to examine the algorithm that made the decision? What if the algorithm doesn't know how to explain itself?

The Obama administration sought to address these issues. Under Mr. Obama, the Office of Science and Technology Policy issued white papers on the ethical implications of AI. Under Mr. Trump, the office still doesn't have a director, and its staff is down to about 45, from about 130. A spokesman for the office declined to comment.

For now, the Trump administration has signaled it wants business to take the lead. The administration is worried that overarching regulation could constrain innovation and make the U.S. less competitive, Michael Kratsios, the deputy director of the Office of Science and Technology Policy, said at a conference in February. He pointed to China's push into artificial intelligence, which is proceeding without much ethical quibbling.

"We're not looking for, or will be endorsing, broad, high level guidelines about AI rules," Mr. Kratsios said. He called it "key" to create "the regulatory environment that ensures that the next great technologies happen here in the U.S."

In the past six months, Intel, IBM, Workday Inc. and the Washington, D.C.-based Information Technology Industry Council -- whose members include Facebook, Apple and Google -- all issued principles on the ethical use of artificial intelligence. In January, Microsoft Corp. put out an entire book on "Artificial Intelligence and its Role in Society."

In 2016, some of the biggest tech companies founded an ethics-setting organization called the Partnership on Artificial Intelligence to Benefit People and Society, based in San Francisco. There are many others with at least some industry funding, including Open AI, the AI Now Institute, doteveryone, and the Center for Democracy & Technology.

Proposed rules have ranged from specific guidelines for government use to requirements that any algorithm be able to explain its process to consumers. Many would apply existing regulations to AI case by case, adapting aviation rules to drones or applying privacy protections to personal data in algorithms.

Setting rules is complicated because companies often can't explain how their more complex systems, called "deep neural networks," arrive at answers. Last year, University of Washington researchers reported that an algorithm that had learned -- by itself -- to distinguish between wolves and husky dogs appeared to be doing so by noticing snow on the ground in the wolf pictures, rather than through any insight into the animals.

"Google, Facebook, those guys are very interested in performance and making these programs as smart as they can," said Dave Gunning, who leads a project for the government's Defense Advanced Research Projects Agency on making algorithms explain their processes.

"Explainability is not as important to them," Mr. Gunning said.

Google and Facebook didn't respond to requests for comment.

At least one business executive, Elon Musk, founder and chief executive of Tesla Inc. and SpaceX, has cut back his role in the ethical research group he co-founded, Open AI. Mr. Musk quit the group's board in February. The group said he wanted to "eliminate a potential future conflict of interest" with his role at Tesla, which is developing AI systems. He remains an Open AI adviser and funder.

Some in Congress are taking up the ethical debate. Washington Sen. Maria Cantwell and Maryland Rep. John Delaney, both Democrats, led a bipartisan bill introduced in December to establish a federal advisory council on the technology's potential impact. There is also an AI caucus on the Hill, started in May, and committees have been holding hearings on AI, algorithms and autonomous vehicles.

The European Union has already adopted regulations covering AI algorithms; they are set to take effect in May.

Human-resources software maker Workday, which uses AI algorithms, assumes that the U.S. will start to set guidelines, if not now then sometime soon.

"Our engagement now is in anticipation that one day there will be government regulation related to AI," said senior vice president Jim Shaughnessy. He said Workday wants to "ensure a regulatory framework that serves society's needs while allowing the potential of AI, and its benefits, to flourish."

(END) Dow Jones Newswires

March 13, 2018 05:44 ET (09:44 GMT)

Copyright (c) 2018 Dow Jones & Company, Inc.

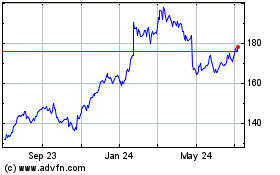

International Business M... (NYSE:IBM)

Historical Stock Chart

From Mar 2024 to Apr 2024

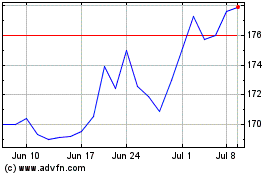

International Business M... (NYSE:IBM)

Historical Stock Chart

From Apr 2023 to Apr 2024