Facebook's Training A.I. to Spot ISIS, al Qaeda Posts -- Nazis Come Next

November 28 2017 - 7:29PM

Dow Jones News

By Sam Schechner and Deepa Seetharaman

Assailed for not doing enough to combat online misinformation and extremism, Facebook Inc. says it is making big strides in one area: removing propaganda posts and accounts from Islamic State and al Qaeda.

The social media company said Tuesday that it has in recent months focused on training artificial intelligence software to identify extremist content specifically from those two terrorist groups to hone its automated technology. Facebook says that has boosted the speed with which it removes posts and accounts from the groups compared with when it announced the initiative in June -- with 99% of the content it removes from the groups detected before being flagged by Facebook users.

Now, Facebook executives acknowledge, comes the hard part: translating some of that narrow success to a broader deluge of extremist content, from white supremacist groups' pages to hate speech posts -- or even Islamic State videos that sport a different look to avoid the automated dragnet.

"One of the dangers there is that we're dealing with a nimble set of organizations that frequently change the way that they behave," said Brian Fishman, lead policy manager for counterterrorism at Facebook. "We need to keep training our machines so that they stay current."

Facebook is stepping up its counterterrorism activity at a time when it and other tech firms are being buffeted on topics ranging from their treatment of smaller rivals to their role in spreading political misinformation.

In September, Facebook admitted that Russian actors manipulated its platform to sway American political discourse, later admitting that roughly 126 million Americans saw at least one of more than 80,000 posts from pages spawned by the pro-Kremlin group Internet Research Agency between June 2015 and August 2017.

Twitter Inc. and YouTube, a unit of Alphabet Inc.'s Google, have also been under fire over the past year for allowing misinformation, hateful speech and terrorist content to spread across their platforms.

The deeper challenge for Facebook's push to use A.I. to counter online extremism is that A.I. tools are only as good as the data that are used to train them. Tech platforms like Facebook rely heavily on software to sift through the reams of content posted to their sites every day. But they need huge numbers of categorized terrorist videos and pictures they can use to teach their algorithms what to look for -- something that might not always be available.

Islamic State and al Qaeda "are prolific in creating content, and it makes it easier to train" a machine to identify it, said Monika Bickert, Facebook's head of global policy management. About 83% of known terror content, including images, videos or text posts, is taken down within an hour of being posted on Facebook, Ms. Bickert said.

Another roadblock is that many postings and pages, particularly text postings, require judgments from content reviewers -- leading the firm to increase their ranks. Facebook will employ 7,000 content reviewers by the end of 2017, up from 4,500 in May, Ms. Bickert said.

Ms. Bickert said a subset of each content reviewer's decisions is audited by Facebook every week to ensure quality and accuracy. But she acknowledged that reviewers still make some mistakes.

"A beheading is easier to enforce than hate speech," Ms. Bickert said. "Certain policies are easier to enforce than others."

Write to Sam Schechner at sam.schechner@wsj.com and Deepa Seetharaman at Deepa.Seetharaman@wsj.com

(END) Dow Jones Newswires

November 28, 2017 19:14 ET (00:14 GMT)

Copyright (c) 2017 Dow Jones & Company, Inc.

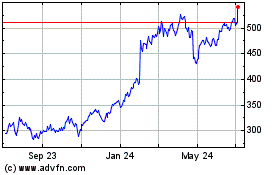

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Mar 2024 to Apr 2024

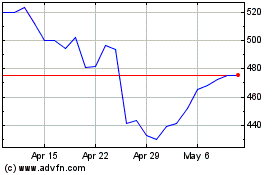

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Apr 2023 to Apr 2024