Facebook Inc. on Tuesday published new details on how it conducts research using the personal information it collects on Facebook users, amid a flurry of efforts to create privacy and ethical standards for corporate research involving human data.

Facebook collects data on roughly 1.6 billion people, including "likes" and social connections, which it uses to look for behavioral patterns such as voting habits, relationship status and how interactions with certain types of content might make people feel. In June 2014, Facebook published a study about a 700,000-person psychological test to determine whether omitting content with words associated with positive or negative emotions could alter mood. The study sparked controversy about the company's ethics.

In October 2014, Facebook announced it was adding an internal review process but declined to divulge specifics.

Because "the issues of how to deal with research in an industry setting aren't unique to Facebook," the company decided to release more details, said Molly Jackman, Facebook's public-policy research manager and co-author of the paper, which was published in the Washington and Lee Law Review.

To assess the ethical impact of each research effort, the Menlo Park, Calif., company has established a five-person standing group of Facebook employees, including experts in law and ethics.

The company declined to identify the five members of the board, which can consult outside experts if deemed necessary.

If a manager determines that a research project deals with sensitive topics such as mental health, the study gets a detailed review by the group to weigh risks and benefits, as well as to consider whether it is in line with consumers' expectations of how their information is stored.

Managers have the authority to simply approve proposals that they deem more innocuous. Which research gets a full review is up to the discretion of the manager.

The review group is modeled on the institutional review boards, or IRBs, that assess the ethics of human-subject research at academic institutions. Facebook hired longtime Stanford University IRB manager Lauri Kanerva, who co-wrote the paper, to oversee its research review process.

The publication comes as tech companies are grappling with how to ensure the ethical conduct of research using their customers' personal data.

Dealing with research ethics is "definitely an emerging field that everyone in the industry is struggling with," said Joetta Bell, senior compliance program manager at Microsoft Corp., who led the ethical review board that the company's Microsoft Research arm launched in 2015.

The amount of information companies such as Facebook collect on people enables their researchers to study a "deeper and broader" cross-section of the population than ever before, said Jeremy Birnholtz, a communications professor at Northwestern University who worked at Facebook last year as a research fellow studying the company's data.

Tech companies are increasingly hiring academics from fields ranging from the life sciences to artificial intelligence to mine social-media data, internet searches, purchasing behavior and publicly available data sets to improve their products, place ads and track health trends. Last week, for instance, Microsoft published a study that suggests that search queries could provide clues that a person might have cancer, even before a diagnosis. In 2013, the company used searches to detect adverse effects of drugs.

Government officials are proposing changes to the federal policy that regulates human research, known as the Common Rule, that would, among other things, make it easier for research participants to give scientists broad consent to use their data and tissue samples for future studies.

But that regulation "doesn't apply to private companies like Facebook, which don't accept any federal research funds," said Michelle Meyer, the director of bioethics policy for the Clarkson-Icahn School of Medicine at Mount Sinai bioethics program.

"Certainly, tech companies have not had IRBs," which are often regarded as bureaucratic, said Duncan Watts, a principal researcher at Microsoft Research. But that is changing, he said.

Some companies, including gene-sequencing company 23andMe, hire an external IRB to vet the majority of their research proposals, according to Joyce Tung, 23andMe's vice president of research. Its review process has been in place since around 2008.

Microsoft and wearables maker Fitbit Inc. also contract with external IRBs for some of their research projects. The rest are largely reviewed internally. A subset of Fitbit's research undergoes an ethics review described in a report published in collaboration with the Center for Democracy and Technology in May.

"Within the entire industry, there's a lot of people doing experiments, and there's no visibility on how they're doing it," said Fitbit's vice president of research Shelten Yuen. "We wanted to disseminate [our process]...so that startups wouldn't have to reinvent this for themselves."

Write to Deepa Seetharaman at Deepa.Seetharaman@wsj.com

(END) Dow Jones Newswires

June 14, 2016 16:35 ET (20:35 GMT)

Copyright (c) 2016 Dow Jones & Company, Inc.

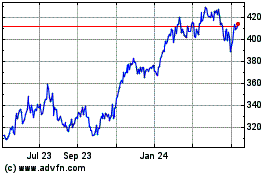

Microsoft (NASDAQ:MSFT)

Historical Stock Chart

From Mar 2024 to Apr 2024

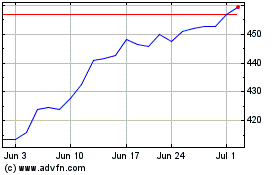

Microsoft (NASDAQ:MSFT)

Historical Stock Chart

From Apr 2023 to Apr 2024